In a recent episode of a TV detective show, an AI tech dude tries to outsmart an old-school musicologist by re-creating the missing part of a vintage blues recording. The professor is asked to pick out the “real” track from among the AI versions. The blues expert guesses correctly within a few beats – much to the frustration of the coder.

“How did you figure it out so quickly?”

“Easy – it’s not just what the AI added, but more importantly what it left out.”

The failure of AI to fully replicate the original song (by omitting a recording error that the AI has “corrected”) is another example showing how AI lacks the human touch, does not yet have intuition, and struggles to exercise informed judgement. Choices may often be a matter of taste, but innate human creativity cannot yet be replicated.

Soon, though, AI tools will displace a lot of work currently done by composers, lyricists, musicians, producers, arrangers and recording engineers. Already, digital audio workstation (DAW) software easily enables anyone with a computer or mobile device to create, record, sample and mix their own music, without needing to read a note of music and without having to strum a chord. Not only that, the software can emulate the acoustic properties of site-specific locations, and correct out-of-tune and out-of-time recordings. So anyone can pretend they are recording at Abbey Road.

I recently blogged about how AI is presenting fresh challenges (as well as opportunities) for the music industry. Expect to see “new” recordings released by (or attributed to) dead pop stars, especially if their back catalogue is out of copyright. This is about more than exhuming preexisting recordings and enhancing them with today’s technology; this is deriving new content from a set of algorithms, trained on vast back catalogues, directed by specific prompts (“bass line in the style of John Entwistle”), and maybe given some core principles of musical composition.

And it’s the AI training that has prompted the major record companies to sue two AI software companies, a state of affairs which industry commentator Rob Abelow says was inevitable, because:

But on the other hand, streaming and automated music are not new. Sound designer and artist Tero Parviainen recently quoted Curtis Roads’ “The Computer Music Tutorial” (2023):

“A new industry has emerged around artificial intelligence (AI) services for creating generic popular music, including Flow Machines, IBM Watson Beat, Google Magenta’s NSynth Super, OpenAI’s Jukebox, Jukedeck, Melodrive, Spotify’s Creator Technology Research Lab, and Amper Music. This is the latest incarnation of a trend that started in the 1920s called Muzak, to provide licensed background music in elevators, business and dental offices, hotels, shopping malls, supermarkets, and restaurants”

And even before the arrival of Muzak in the 1920s, the world’s first streaming service was launched in the late 1890s, using the world’s first synthesizer – the Telharmonium. (Thanks to Mark Brend’s “The Sound of Tomorrow”, I learned that Mark Twain was the first subscriber.)

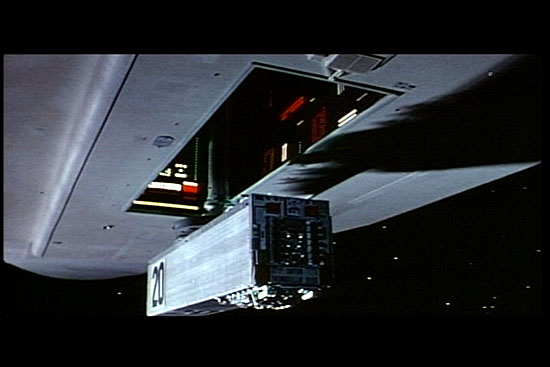

For music purists and snobs (among whom I would probably count myself), all this talk about the impact of AI on music raises questions of aesthetics as well as ethics. But I’m reminded of some comments made by Pink Floyd about 50 years ago, when asked about their use of synthesizers, during the making of “Live at Pompeii”. In short, they argue that such machines still need human input, and as long as the musicians are controlling the equipment (and not the other way around), then what’s the problem? It’s not like they are cheating, disguising what they are doing, or compensating for a lack of ability – and the technology doesn’t make them better musicians, it just allows them to do different things:

“It’s like saying, ‘Give a man a Les Paul guitar, and he becomes Eric Clapton’… It’s not true.”

(Well, not yet, but I’m sure AI is working on it…)

Next week: Some final thoughts on AI